2025 ChronoEdit: A Complete Guide to Time-Reasoning-Based Image Editing and World Simulation

🎯 Key Takeaways (TL;DR)

- ChronoEdit: A novel image editing framework developed by NVIDIA that treats image editing as a video generation task to ensure physical consistency and temporal coherence.

- Temporal Reasoning Stage: Introduces "temporal reasoning tokens" to simulate intermediate frames during the editing process, helping the model "think" and generate physically plausible editing trajectories.

- Outstanding Performance: Surpasses existing technologies in visual fidelity and physical plausibility, particularly excelling in scenarios requiring physical consistency (such as autonomous driving and humanoid robotics).

- Open Source Initiative: Provides Diffusers inference, DiffSynth-Studio LoRA fine-tuning, and complete model training infrastructure, with plans to release more lightweight models.

Table of Contents

- What is ChronoEdit?

- Why Do We Need ChronoEdit? Limitations of Existing Image Editing

- How Does ChronoEdit Work? Core Methods and Temporal Reasoning

- ChronoEdit Application Scenarios and Case Studies

- How to Get Started with ChronoEdit?

- ChronoEdit vs Qwen Edit: Advantages and Differences

- Frequently Asked Questions

- Summary and Action Recommendations

What is ChronoEdit?

ChronoEdit is an innovative image editing framework developed by NVIDIA, designed to address the challenges traditional image editing models face in maintaining physical consistency and coherence by introducing temporal reasoning capabilities. It reimagines static image editing tasks as video generation problems, leveraging large pre-trained video generation models to capture implicit physical laws governing object appearance, motion, and interaction, thereby generating more realistic and natural editing results.

💡 Pro Tip

The core of ChronoEdit lies in its unique "temporal reasoning stage," which enables the AI model to "think" about physical changes during the editing process like a human, rather than simply modifying pixels.

Why Do We Need ChronoEdit? Limitations of Existing Image Editing

Although current large generative models have made significant progress in image editing and contextual image generation, a critical gap remains when handling scenarios requiring physical consistency. For example, when editing objects in an image, models struggle to ensure that the edited object is physically credible—such as how the surrounding environment naturally changes when an object is picked up, or whether a car's trajectory is reasonable when turning. This capability is particularly crucial for "Physical AI" related tasks such as autonomous driving and robotics.

Traditional image editing models often focus only on the final editing effect while ignoring the physical evolution process from the original state to the edited state, resulting in generated images that may lack realism and logic.

How Does ChronoEdit Work? Core Methods and Temporal Reasoning

ChronoEdit effectively addresses the limitations of existing image editing through its unique pipeline design. Its workflow mainly includes two key stages:

1. Temporal Reasoning Stage

At the beginning of the denoising process, the model "imagines" and denoises a short trajectory containing intermediate frames. These intermediate frames are called "temporal reasoning tokens", which serve as guiding signals to help the model infer how the edit should unfold in a physically consistent manner.

Flowchart Description

2. Edited Frame Generation Stage

To improve efficiency, after the temporal reasoning stage, the reasoning tokens are discarded. The model then enters the edited frame generation stage, where it further refines the target frame and generates the final edited image.

Flowchart Description

Through this two-stage approach, ChronoEdit not only achieves high-quality image editing but also ensures that editing results are physically credible and coherent.

ChronoEdit Application Scenarios and Case Studies

ChronoEdit demonstrates powerful application potential in multiple fields, especially in scenarios requiring high-precision physical simulation and image editing.

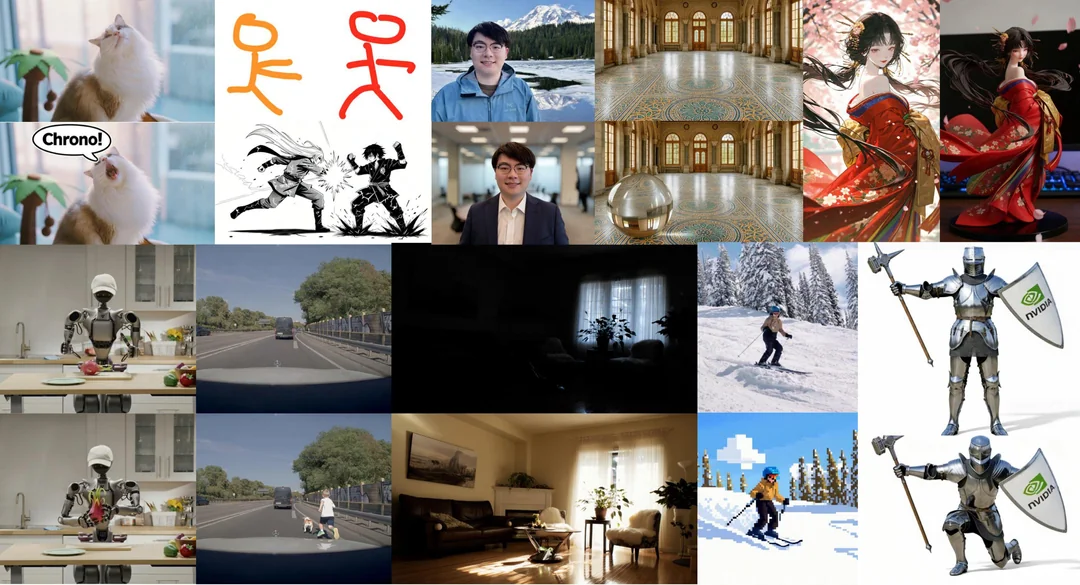

Image Editing Results

ChronoEdit can perform various complex image editing tasks while maintaining excellent visual quality and physical plausibility. Users can hover to view before-and-after comparisons.

Example Editing Types:

| Edit Type | Description |

|---|---|

| Pose Change | Change the pose of people or objects, such as rotating a person to a side view. |

| Character Consistency | Change the style or state while maintaining character features, such as turning a girl into a PVC figure. |

| Sketch-to-Image | Generate detailed images based on provided sketch structures. |

| Remove | Intelligently remove specific objects from images, such as removing glasses. |

| Edge Detection | Extract precise edge maps from input images. |

| Replace | Replace an object or background in an image with other content, such as replacing food with carrots or background with sunset forest. |

| Style Transfer | Convert images to specific artistic styles, such as converting skiing scenes to pixel art. |

| World Simulation | Simulate interactions and changes in the physical world, such as stirring paint or moving objects. |

| Add | Add new objects or elements to images, such as adding a cat on a bench. |

| Action | Simulate people or objects performing specific actions, such as a man fishing. |

Temporal Reasoning Visualization

ChronoEdit can visualize its "reasoning" process by denoising temporal reasoning tokens, showing the trajectory behind the edit. This is valuable for understanding how the model makes physical judgments.

💡 Pro Tip

Temporal reasoning tokens do not need to be fully denoised during inference, but in demonstrations, these tokens are optionally denoised into videos to show how the model thinks about and explains editing tasks.

Physical AI Related Tasks

ChronoEdit performs particularly well in Physical AI-related scenarios, generating edits that faithfully follow physical consistency, which is crucial for fields such as autonomous driving and robotics.

Physical AI Examples:

| Edit Type | Description |

|---|---|

| Action | Robot picking up dragon fruit. |

| World Simulation | Robot arm picking up potato and placing it on clipboard; black sedan moving forward; white car turning left; shooting basketball into net; placing blue item in shopping cart; putting toast in toaster; pouring water into cup until full; robot arm picking up silver kettle. |

| Character Consistency | Robot driving a car. |

| Remove | Remove all vegetables and plates from table. |

How to Get Started with ChronoEdit?

NVIDIA has open-sourced ChronoEdit and provided detailed guides to facilitate deployment and experimentation by developers and researchers.

1. Installation and Environment Setup

First, clone the ChronoEdit GitHub repository and create a Python environment.

git clone https://github.com/nv-tlabs/ChronoEdit cd ChronoEdit conda env create -f environment.yml -n chronoedit_mini conda activate chronoedit_mini pip install torch==2.7.1 torchvision==0.22.1 pip install -r requirements_minimal.txt

Optional: To speed up inference, install Flash Attention.

export MAX_JOBS=16 pip install flash-attn==2.6.3

Download the ChronoEdit-14B model weights from HuggingFace:

hf download nvidia/ChronoEdit-14B-Diffusers --local-dir checkpoints/ChronoEdit-14B-Diffusers

2. Diffusers Inference

ChronoEdit supports single GPU inference and inference with prompt enhancer.

Single GPU Inference

PYTHONPATH=$(pwd) python scripts/run_inference_diffusers.py \ --input assets/images/input_2.png --offload_model --use-prompt-enhancer \ --prompt "Add a sunglasses to the cat's face" \ --output output.mp4 \ --model-path ./checkpoints/ChronoEdit-14B-Diffusers

⚠️ Note

With the--offload_modelflag enabled, inference requires approximately 34GB GPU memory. In temporal reasoning mode, GPU memory requirements increase to approximately 38GB.

Using Prompt Enhancer

Add the --use-prompt-enhancer flag to enable automatic prompt enhancement. The Qwen/Qwen3-VL-30B-A3B-Instruct model is recommended by default for best results, but it requires up to 60GB peak memory.

✅ Best Practice

Users are strongly encouraged to read the Prompt Guidance for optimal results, or use an online LLM chat agent with the provided system prompt.

Using 8-Step Distilled LoRA

Inference speed can be optimized through distilled LoRA. Recommended hyperparameters are --flow-shift 2.0, --guidance-scale 1.0, and --num-inference-steps 8.

# Advanced usage with lora settings PYTHONPATH=$(pwd) accelerate launch scripts/train_diffsynth.py \ --dataset_base_path data/example_dataset \ --dataset_metadata_path data/example_dataset/metadata.csv \ --height 1024 \ --width 1024 \ --num_frames 5 \ --dataset_repeat 1 \ --model_paths '[["checkpoints/ChronoEdit-14B-Diffusers/transformer/diffusion_pytorch_model-00001-of-00014.safetensors","checkpoints/ChronoEdit-14B-Diffusers/transformer/diffusion_pytorch_model-00002-of-00014.safetensors","checkpoints/ChronoEdit-14B-Diffusers/transformer/diffusion_pytorch_model-00003-of-00014.safetensors","checkpoints/ChronoEdit-14B-Diffusers/transformer/diffusion_pytorch_model-00004-of-00014.safetensors","checkpoints/ChronoEdit-14B-Diffusers/transformer/diffusion_pytorch_model-00005-of-00014.safetensors","checkpoints/ChronoEdit-14B-Diffusers/transformer/diffusion_pytorch_model-00006-of-00014.safetensors","checkpoints/ChronoEdit-14B-Diffusers/transformer/diffusion_pytorch_model-00007-of-00014.safetensors","checkpoints/ChronoEdit-14B-Diffusers/transformer/diffusion_pytorch_model-00008-of-00014.safetensors","checkpoints/ChronoEdit-14B-Diffusers/transformer/diffusion_pytorch_model-00009-of-00014.safetensors","checkpoints/ChronoEdit-14B-Diffusers/transformer/diffusion_pytorch_model-00010-of-00014.safetensors","checkpoints/ChronoEdit-14B-Diffusers/transformer/diffusion_pytorch_model-00011-of-00014.safetensors","checkpoints/ChronoEdit-14B-Diffusers/transformer/diffusion_pytorch_model-00012-of-00014.safetensors","checkpoints/ChronoEdit-14B-Diffusers/transformer/diffusion_pytorch_model-00013-of-00014.safetensors","checkpoints/ChronoEdit-14B-Diffusers/transformer/diffusion_pytorch_model-00014-of-00014.safetensors"]]' \ --model_id_with_origin_paths "Wan-AI/Wan2.1-I2V-14B-720P:models_t5_umt5-xxl-enc-bf16.pth,Wan-AI/Wan2.1-I2V-14B-720P:Wan2.1_VAE.pth,Wan-AI/Wan2.1-I2V-14B-720P:models_clip_open-clip-xlm-roberta-large-vit-huge-14.pth" \ --learning_rate 1e-4 \ --num_epochs 5 \ --remove_prefix_in_ckpt "pipe.dit." \ --output_path "./models/train/ChronoEdit-14B_lora" \ --lora_base_model "dit" \ --lora_target_modules "q,k,v,o,ffn.0,ffn.2" \ --lora_rank 32 \ --extra_inputs "input_image" \ --use_gradient_checkpointing_offload

3. LoRA Fine-tuning and DiffSynth-Studio

ChronoEdit supports LoRA fine-tuning using DiffSynth-Studio:

pip install git+https://github.com/modelscope/DiffSynth-Studio.git

For detailed steps on training LoRA, please refer to the Dataset Doc.

4. Creating Your Own Training Dataset

ChronoEdit provides an automated edit annotation script that can generate high-quality editing instructions from image pairs (before and after editing). The script leverages advanced vision-language models and uses chain-of-thought (CoT) reasoning to analyze image pairs and generate precise editing prompts. For details, please refer to the Dataset Guide.

ChronoEdit vs Qwen Edit: Advantages and Differences

In Reddit discussions about ChronoEdit, users frequently compared it with Qwen Edit. Here are the main points:

| Feature | ChronoEdit | Qwen Edit |

|---|---|---|

| Core Mechanism | Treats image editing as video generation, focusing on temporal consistency and physical plausibility. | Traditional image editing model, may primarily focus on final image effects. |

| Image Quality | In Reddit user tests, generally believed to perform edits without degrading overall image quality. | Some users reported occasional degradation of overall image quality. |

| Physical Consistency | Introduces "temporal reasoning tokens" to explicitly simulate physical changes, ensuring physical plausibility of edits. | Less emphasis on physical consistency, may underperform in complex physical interaction scenarios. |

| Model Size | ChronoEdit-14B model is relatively small, with potential for efficient application through LoRA fine-tuning. | Qwen Edit model may be larger (users speculate 20B model). |

| Potential Compatibility | May be compatible with existing Wan LoRA due to being based on Wan model. | Compatibility not explicitly mentioned. |

| Facial Recognition | User feedback indicates poor performance in facial identity preservation. | User feedback indicates poor performance in facial identity preservation. |

💡 Pro Tip

ChronoEdit shows advantages in maintaining overall image quality and physical consistency, especially when handling scenarios requiring simulation of physical world changes. Its smaller model size also provides convenience for subsequent LoRA fine-tuning and deployment.

🤔 Frequently Asked Questions

Q: Does ChronoEdit support NSFW content generation?

A: According to Reddit discussions, ChronoEdit may not support generating NSFW (Not Safe For Work) content, even with added LoRA.

Q: How is ChronoEdit's inference speed?

A: After using 8-step distilled LoRA with recommended hyperparameters, inference efficiency can be significantly improved.

Q: How to understand ChronoEdit's "temporal reasoning"?

A: "Temporal reasoning" refers to the model generating a series of intermediate frames (reasoning tokens) during the editing process to simulate the physical evolution from the original image to the edited image, ensuring that editing results conform to physical laws rather than simply modifying pixels.

Q: Can ChronoEdit be used in ComfyUI workflows?

A: The Reddit community has shown strong interest in integrating ChronoEdit into ComfyUI, with users already sharing GGUF format models, indicating its potential for use in ComfyUI.

Q: How much GPU memory does the ChronoEdit-14B model require?

A: With the --offload_model flag enabled, approximately 34GB GPU memory is required; in temporal reasoning mode, approximately 38GB is needed.

Summary and Action Recommendations

ChronoEdit represents an important innovation in the field of image editing. By reimagining editing tasks as video generation and introducing temporal reasoning mechanisms, it significantly improves the physical consistency and visual fidelity of editing results. This has milestone significance for application scenarios requiring high realism and logical coherence (especially in the Physical AI field).

Action Recommendations:

- Explore Hugging Face Demo: Visit ChronoEdit Hugging Face Space to experience real-time editing features.

- Check GitHub Repository: Visit nv-tlabs/ChronoEdit GitHub for the latest code, installation guides, and model weights.

- Read Academic Paper: Gain in-depth understanding of ChronoEdit's theoretical foundation and technical details by reading the arXiv paper.

- Participate in Community Discussions: Join discussions in communities like Reddit (such as r/StableDiffusion) to get the latest usage tips, workflow sharing, and troubleshooting advice.

- Try LoRA Fine-tuning: For advanced users, try using DiffSynth-Studio to fine-tune ChronoEdit with LoRA to adapt to specific needs or produce higher quality outputs.