2025 Complete Guide: How to Build End-to-End OCR with HunyuanOCR

🎯 Key Takeaways (TL;DR)

- A single 1B multimodal architecture covers detection, recognition, parsing, translation, and more in one unified OCR pipeline.

- Dual inference paths (vLLM + Transformers) plus well-crafted prompts make rapid production deployment straightforward.

- Benchmarks reported by the authors (in-house sets plus public ones such as OmniDocBench) show gains over traditional OCR and general-purpose VLMs across spotting, document parsing, and information extraction.

Table of Contents

- What Is HunyuanOCR?

- Why Is HunyuanOCR So Strong?

- How to Deploy HunyuanOCR Quickly?

- How to Design Business-Ready Prompts?

- What Performance Evidence Exists?

- How Does the Inference Flow Work?

- FAQ

- Summary & Action Plan

What Is HunyuanOCR?

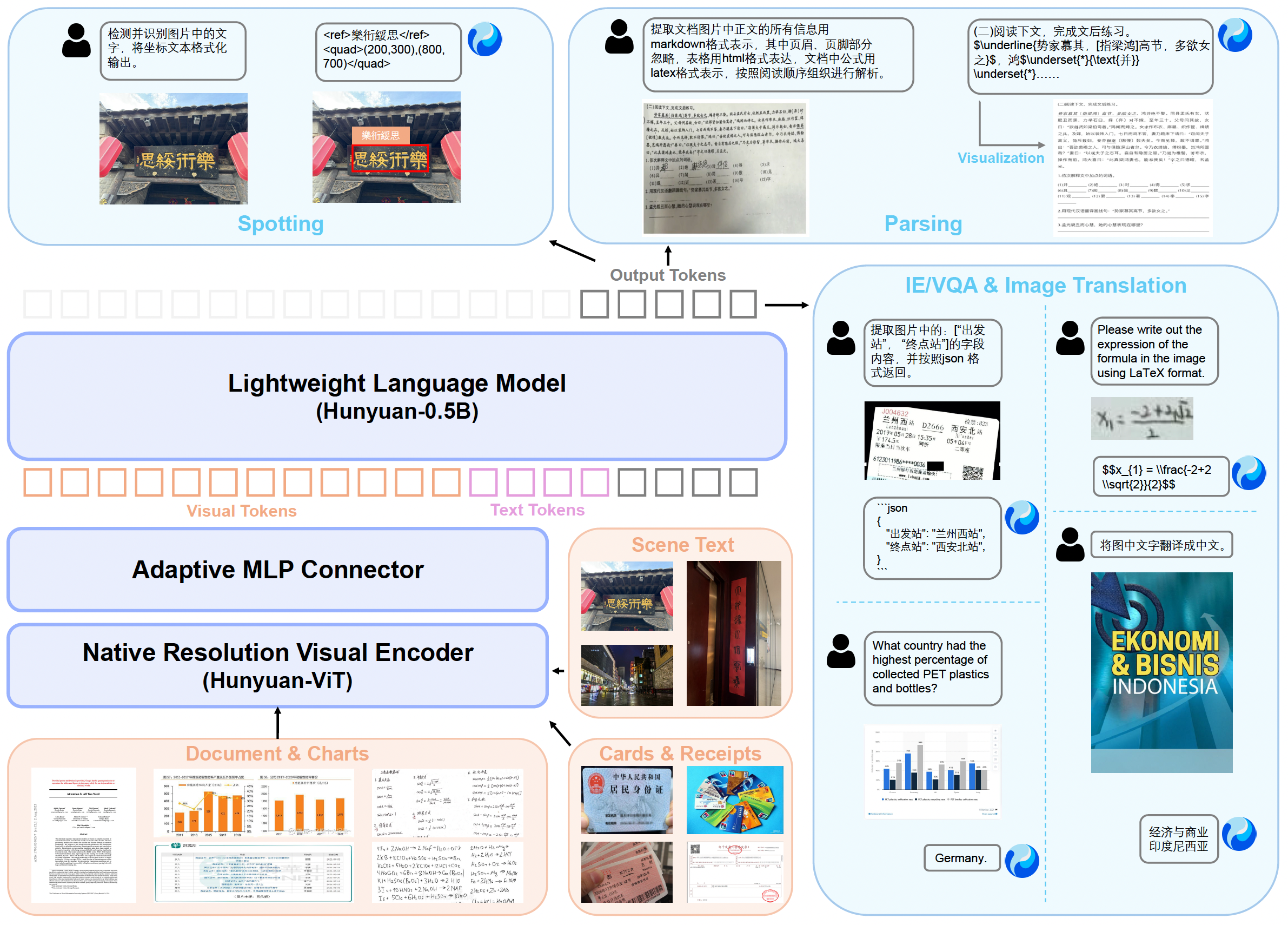

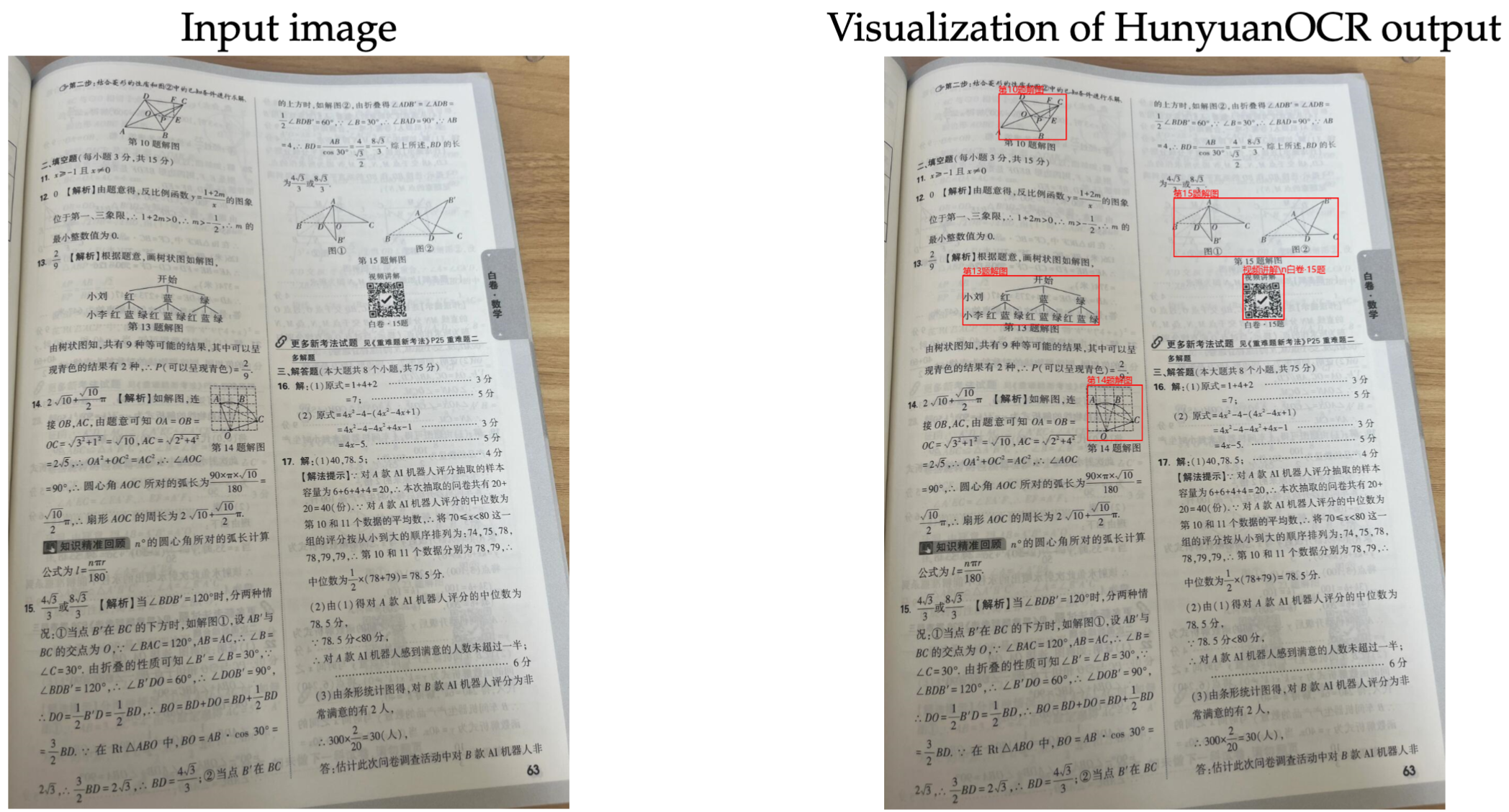

HunyuanOCR is Tencent Hunyuan’s end-to-end OCR-specific vision-language model (VLM). Built on a native multimodal architecture with only 1B parameters, it reaches state-of-the-art results on text spotting, complex document parsing, open-field information extraction, subtitle extraction, and image translation.

✅ Best Practice

When a single pass must handle multilingual text and complex layouts, prefer a “single-prompt, single-inference” end-to-end model: it removes the hand-offs between pipeline stages and the latency they add.

Why Is HunyuanOCR So Strong?

Lightweight, Full-Modal Coverage

- 1B native multimodal design: achieves SOTA quality with a self-developed training strategy while keeping inference cost low.

- Task completeness: detection, recognition, parsing, info extraction, subtitles, and translation all handled within one model.

- Language breadth: supports 100+ languages across documents, street views, handwriting, tickets, etc.

True End-to-End Experience

- Single prompt → single inference: avoids cascading OCR error accumulation.

- Flexible output: coordinates, LaTeX, HTML, Mermaid, Markdown, JSON—choose whatever structure you need.

- Video-friendly: extracts bilingual subtitles directly for downstream translation or editing.

💡 Pro Tip

Tailor prompts to your business format (HTML tables, JSON fields, bilingual subtitles) to unleash structured outputs from the end-to-end pipeline.

How to Deploy HunyuanOCR Quickly?

System Requirements

- OS: Linux

- Python: 3.12+

- CUDA: 12.8

- PyTorch: 2.7.1

- GPU: NVIDIA CUDA GPU with 80GB memory

- Disk: 6GB

vLLM Deployment (Recommended)

- Install the nightly build: pip install vllm --extra-index-url https://wheels.vllm.ai/nightly

- Load tencent/HunyuanOCR plus AutoProcessor.

- Build messages containing image + instruction, then call apply_chat_template for the prompt.

- Configure SamplingParams(temperature=0, max_tokens=16384).

- Invoke llm.generate and run post-processing (e.g., clean_repeated_substrings).
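The vLLM steps above can be sketched roughly as follows. This is a minimal sketch, not the official script: the helper names build_messages and run_vllm_ocr are hypothetical, and the empty system message, trust_remote_code=True, and the message dictionary layout are assumptions to check against the README. The heavy imports live inside the function so the message helper can be used without a GPU stack installed.

```python
from typing import Any


def build_messages(image_path: str, instruction: str) -> list[dict[str, Any]]:
    """Build a chat-style message list holding one image and one instruction.

    The empty system message and the content layout are assumptions.
    """
    return [
        {"role": "system", "content": ""},
        {
            "role": "user",
            "content": [
                {"type": "image", "image": image_path},
                {"type": "text", "text": instruction},
            ],
        },
    ]


def run_vllm_ocr(
    image_path: str,
    instruction: str,
    model_path: str = "tencent/HunyuanOCR",
) -> str:
    """Run a single OCR request through vLLM (requires a CUDA GPU)."""
    # Imported lazily: these packages are only needed when inference runs.
    from PIL import Image
    from transformers import AutoProcessor
    from vllm import LLM, SamplingParams

    llm = LLM(model=model_path, trust_remote_code=True)  # flag is an assumption
    processor = AutoProcessor.from_pretrained(model_path)
    sampling_params = SamplingParams(temperature=0, max_tokens=16384)

    # Render the chat messages into the prompt string the model expects.
    prompt = processor.apply_chat_template(
        build_messages(image_path, instruction),
        tokenize=False,
        add_generation_prompt=True,
    )
    request = {
        "prompt": prompt,
        "multi_modal_data": {"image": [Image.open(image_path)]},
    }
    output = llm.generate([request], sampling_params)[0]
    return output.outputs[0].text
```

In a service you would construct the LLM once at startup and reuse it across requests; it is built inside the function here only to keep the sketch short.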

Transformers Deployment

- Install the pinned branch: pip install git+https://github.com/huggingface/transformers@82a06d...

- Use HunYuanVLForConditionalGeneration with AutoProcessor.

- Call model.generate(..., max_new_tokens=16384, do_sample=False).

- Note: This path currently trails vLLM in performance (official fix in progress).
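A rough sketch of the Transformers path, under stated assumptions: it presumes the pinned branch exposes HunYuanVLForConditionalGeneration as named above, that from_pretrained accepts dtype and device_map, and that the processor takes text and images keyword arguments. The helper names strip_prompt_tokens and run_transformers_ocr are hypothetical.

```python
def strip_prompt_tokens(
    input_ids: list[list[int]], generated_ids: list[list[int]]
) -> list[list[int]]:
    """Drop the echoed prompt tokens so only newly generated tokens remain."""
    return [out[len(inp):] for inp, out in zip(input_ids, generated_ids)]


def run_transformers_ocr(
    image_path: str,
    instruction: str,
    model_path: str = "tencent/HunyuanOCR",
) -> str:
    """Run a single OCR request through Transformers (requires a CUDA GPU)."""
    # Imported lazily: these packages are only needed when inference runs.
    import torch
    from PIL import Image
    from transformers import AutoProcessor, HunYuanVLForConditionalGeneration

    processor = AutoProcessor.from_pretrained(model_path)
    model = HunYuanVLForConditionalGeneration.from_pretrained(
        model_path, dtype=torch.bfloat16, device_map="auto"
    )

    # Message layout is an assumption; check it against the README.
    messages = [
        {"role": "system", "content": ""},
        {
            "role": "user",
            "content": [
                {"type": "image", "image": image_path},
                {"type": "text", "text": instruction},
            ],
        },
    ]
    prompt = processor.apply_chat_template(
        messages, tokenize=False, add_generation_prompt=True
    )
    inputs = processor(
        text=[prompt], images=[Image.open(image_path)], return_tensors="pt"
    ).to(model.device)

    with torch.no_grad():
        generated = model.generate(**inputs, max_new_tokens=16384, do_sample=False)

    # generate() returns prompt + completion; keep only the completion.
    new_tokens = strip_prompt_tokens(inputs["input_ids"].tolist(), generated.tolist())
    return processor.batch_decode(new_tokens, skip_special_tokens=True)[0]
```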

⚠️ Heads-up

README scripts default to bfloat16 and device_map="auto". In multi-GPU setups, ensure memory sharding is deliberate to avoid distributed OOM.

How to Design Business-Ready Prompts?

Task Prompt Cheat Sheet

| Task | English Prompt | Chinese Prompt |

|---|---|---|

| Spotting | Detect and recognize text in the image, and output the text coordinates in a formatted manner. | 检测并识别图片中的文字,将文本坐标格式化输出。 |

| Document Parsing | Identify formulas (LaTeX), tables (HTML), flowcharts (Mermaid), and parse body text in reading order. | 识别图片中的公式/表格/图表并按要求输出。 |

| General Parsing | Extract the text in the image. | 提取图中的文字。 |

| Information Extraction | Extract specified fields in JSON; extract subtitles. | 提取字段并按 JSON 返回;提取字幕。 |

| Translation | First extract text, then translate; formulas → LaTeX, tables → HTML. | 先提取文字,再翻译;公式用 LaTeX,表格用 HTML。 |

Prompting Principles

- Structure first: explicitly request JSON/HTML/Markdown to reduce post-processing.

- Field enumeration: list all keys for information extraction to avoid missing items.

- Language constraints: specify target language for translation/subtitle tasks.

- Redundancy cleanup: apply substring dedupe helpers on long outputs.
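The principles above can be turned into small prompt builders. The sketch below is illustrative only: the function names and the exact English wording are hypothetical (not the official prompts), so compare the generated text against the cheat sheet and tune it on your own samples.

```python
import json


def build_extraction_prompt(fields: list[str]) -> str:
    """Compose an extraction prompt that enumerates every key and asks for JSON."""
    if not fields:
        raise ValueError("list at least one field to extract")
    # ensure_ascii=False keeps non-English field names readable in the prompt.
    keys = json.dumps(fields, ensure_ascii=False)
    return (
        f"Extract the following fields from the image: {keys}. "
        "Return a single JSON object that uses exactly these keys. "
        "Use an empty string for any field that is not present."
    )


def build_translation_prompt(target_language: str) -> str:
    """Compose a translation prompt with an explicit target language."""
    return (
        f"First extract the text, then translate it into {target_language}. "
        "Output formulas as LaTeX and tables as HTML."
    )


if __name__ == "__main__":
    print(build_extraction_prompt(["invoice_no", "date", "total"]))
    print(build_translation_prompt("English"))
```

Listing the keys in the prompt also gives you the exact set to validate against once the response comes back.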

What Performance Evidence Exists?

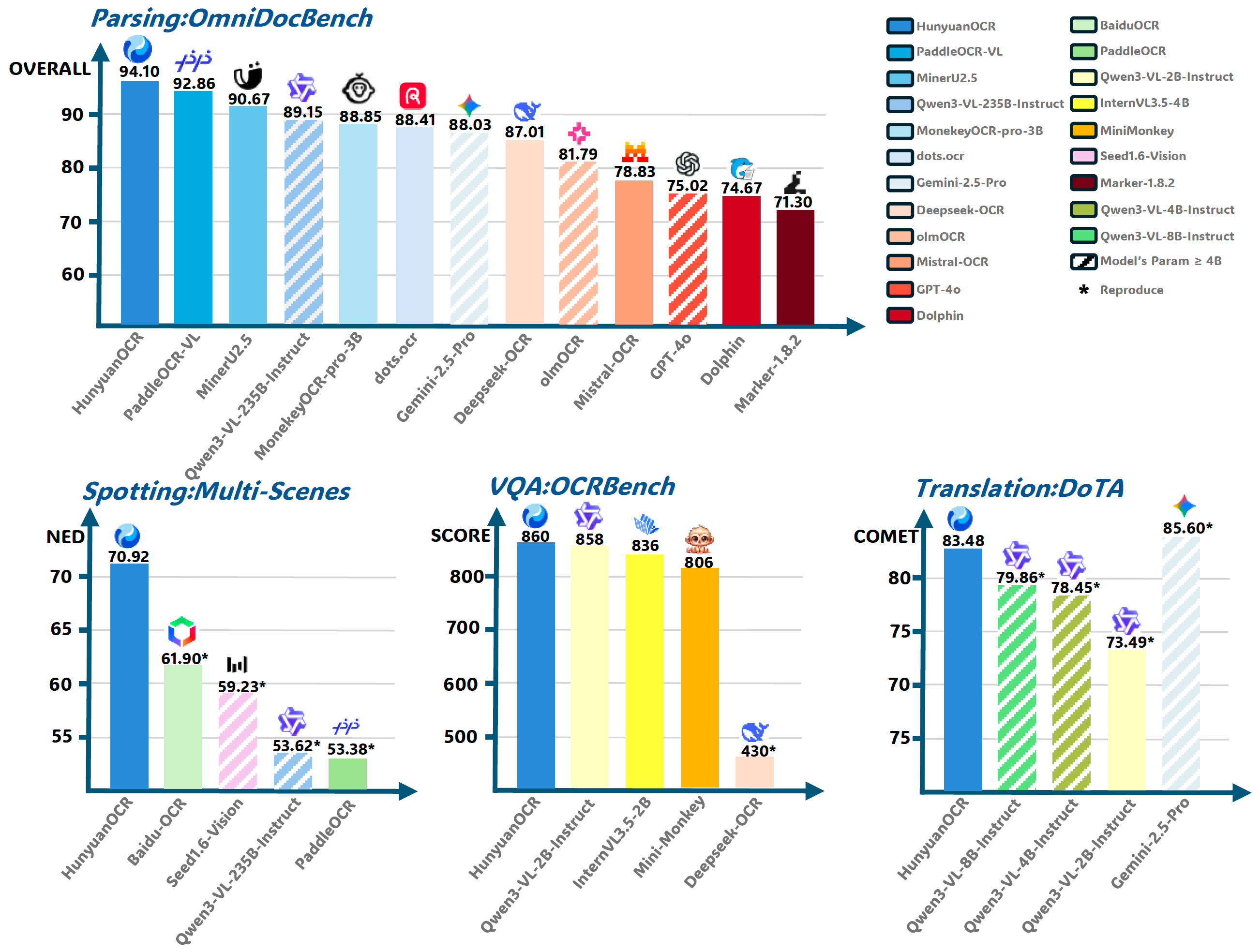

Text Spotting & Recognition (In-house Benchmark)

| Model Type | Method | Overall | Art | Doc | Game | Hand | Ads | Receipt | Screen | Scene | Video |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Traditional | PaddleOCR | 53.38 | 32.83 | 70.23 | 51.59 | 56.39 | 57.38 | 50.59 | 63.38 | 44.68 | 53.35 |

| Traditional | BaiduOCR | 61.90 | 38.50 | 78.95 | 59.24 | 59.06 | 66.70 | 63.66 | 68.18 | 55.53 | 67.38 |

| General VLM | Qwen3VL-2B-Instruct | 29.68 | 29.43 | 19.37 | 20.85 | 50.57 | 35.14 | 24.42 | 12.13 | 34.90 | 40.10 |

| General VLM | Qwen3VL-235B-Instruct | 53.62 | 46.15 | 43.78 | 48.00 | 68.90 | 64.01 | 47.53 | 45.91 | 54.56 | 63.79 |

| General VLM | Seed-1.6-Vision | 59.23 | 45.36 | 55.04 | 59.68 | 67.46 | 65.99 | 55.68 | 59.85 | 53.66 | 70.33 |

| OCR VLM | HunyuanOCR | 70.92 | 56.76 | 73.63 | 73.54 | 77.10 | 75.34 | 63.51 | 76.58 | 64.56 | 77.31 |

Document Parsing (OmniDocBench + Multilingual Benchmarks)

| Type | Method | Size | Omni Overall | Omni Text | Omni Formula | Omni Table | Wild Overall | Wild Text | Wild Formula | Wild Table | DocML |

|---|---|---|---|---|---|---|---|---|---|---|---|

| General VLM | Gemini-2.5-Pro | - | 88.03 | 0.075 | 85.92 | 85.71 | 80.59 | 0.118 | 75.03 | 78.56 | 82.64 |

| General VLM | Qwen3-VL-235B | 235B | 89.15 | 0.069 | 88.14 | 86.21 | 79.69 | 0.090 | 80.67 | 68.31 | 81.40 |

| Modular VLM | MonkeyOCR-pro-3B | 3B | 88.85 | 0.075 | 87.50 | 86.78 | 70.00 | 0.211 | 63.27 | 67.83 | 56.50 |

| Modular VLM | MinerU2.5 | 1.2B | 90.67 | 0.047 | 88.46 | 88.22 | 70.91 | 0.218 | 64.37 | 70.15 | 52.05 |

| Modular VLM | PaddleOCR-VL | 0.9B | 92.86 | 0.035 | 91.22 | 90.89 | 72.19 | 0.232 | 65.54 | 74.24 | 57.42 |

| End-to-End VLM | Mistral-OCR | - | 78.83 | 0.164 | 82.84 | 70.03 | - | - | - | - | 64.71 |

| End-to-End VLM | Deepseek-OCR | 3B | 87.01 | 0.073 | 83.37 | 84.97 | 74.23 | 0.178 | 70.07 | 70.41 | 57.22 |

| End-to-End VLM | dots.ocr | 3B | 88.41 | 0.048 | 83.22 | 86.78 | 78.01 | 0.121 | 74.23 | 71.89 | 77.50 |

| End-to-End VLM | HunyuanOCR | 1B | 94.10 | 0.042 | 94.73 | 91.81 | 85.21 | 0.081 | 82.09 | 81.64 | 91.03 |

Information Extraction & VQA

| Model | Cards | Receipts | Video Subtitles | OCRBench |

|---|---|---|---|---|

| DeepSeek-OCR | 10.04 | 40.54 | 5.41 | 430 |

| PP-ChatOCR | 57.02 | 50.26 | 3.10 | - |

| Qwen3-VL-2B | 67.62 | 64.62 | 3.75 | 858 |

| Seed-1.6-Vision | 70.12 | 67.50 | 60.45 | 881 |

| Qwen3-VL-235B | 75.59 | 78.40 | 50.74 | 920 |

| Gemini-2.5-Pro | 80.59 | 80.66 | 53.65 | 872 |

| HunyuanOCR | 92.29 | 92.53 | 92.87 | 860 |

Image Translation

| Method | Size | Other2En | Other2Zh | DoTA (en2zh) |

|---|---|---|---|---|

| Gemini-2.5-Flash | - | 79.26 | 80.06 | 85.60 |

| Qwen3-VL-235B | 235B | 73.67 | 77.20 | 80.01 |

| Qwen3-VL-8B | 8B | 75.09 | 75.63 | 79.86 |

| Qwen3-VL-4B | 4B | 70.38 | 70.29 | 78.45 |

| Qwen3-VL-2B | 2B | 66.30 | 66.77 | 73.49 |

| PP-DocTranslation | - | 52.63 | 52.43 | 82.09 |

| HunyuanOCR | 1B | 73.38 | 73.62 | 83.48 |

💡 Pro Tip

For multilingual invoices, IDs, or subtitles, HunyuanOCR is a strong first choice: it scores 92.29 on Cards, 92.53 on Receipts, and 92.87 on Video Subtitles, well ahead of every other model in the information extraction table.

How Does the Inference Flow Work?

📊 Implementation Flow

✅ Best Practice

Add guardrails at the end (empty-output detection, JSON schema validation) to shield downstream systems from malformed results.
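One way to implement such guardrails is sketched below. The names MalformedOutput and parse_extraction_output are hypothetical, and the key check is a lightweight stand-in for full JSON Schema validation; it assumes the model was asked to return one JSON object.

```python
import json


class MalformedOutput(ValueError):
    """Raised when the model output cannot be passed downstream safely."""


def parse_extraction_output(raw: str, required_keys: set[str]) -> dict:
    """Reject empty output, parse the JSON object, and check required keys."""
    text = raw.strip()
    if not text:
        raise MalformedOutput("model returned empty output")

    # Tolerate stray text around the object by slicing from the first "{"
    # to the last "}".
    start, end = text.find("{"), text.rfind("}")
    if start == -1 or end < start:
        raise MalformedOutput("no JSON object found in output")

    try:
        data = json.loads(text[start : end + 1])
    except json.JSONDecodeError as exc:
        raise MalformedOutput(f"invalid JSON: {exc}") from exc

    missing = required_keys - set(data)
    if missing:
        raise MalformedOutput(f"missing keys: {sorted(missing)}")
    return data


if __name__ == "__main__":
    sample = 'Result: {"total": "12.50", "date": "2025-01-01"}'
    print(parse_extraction_output(sample, {"total", "date"}))
```

Raising a dedicated exception lets the caller decide whether to retry the request, fall back to another prompt, or route the image to manual review.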

🤔 FAQ

Q: How much GPU memory is required?

A: 80GB is recommended for 16K-token decoding. For smaller GPUs, reduce max_tokens, downsample images, or enable tensor parallelism.
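For the image downsampling option, a small helper can compute the target size before resizing. This is a sketch: fit_within is a hypothetical name, and the source gives no recommended maximum side length, so any value you pass is your own choice to tune against accuracy.

```python
def fit_within(width: int, height: int, max_side: int) -> tuple[int, int]:
    """Scale (width, height) down so the longer side is at most max_side.

    The aspect ratio is preserved; images already small enough are unchanged.
    """
    if width <= 0 or height <= 0 or max_side <= 0:
        raise ValueError("width, height, and max_side must be positive")
    longest = max(width, height)
    if longest <= max_side:
        return width, height
    scale = max_side / longest
    # Guard against a dimension rounding down to zero on extreme aspect ratios.
    return max(1, round(width * scale)), max(1, round(height * scale))


if __name__ == "__main__":
    print(fit_within(4000, 3000, 2000))
```

With Pillow, the result can be passed straight to resize, for example img.resize(fit_within(*img.size, 2000)).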

Q: What’s the gap between vLLM and Transformers?

A: vLLM delivers better throughput and latency today and is the preferred path. Transformers currently lags (a fix is in progress upstream) but remains useful for custom ops or debugging.

Q: How do I guarantee structured outputs?

A: Define the exact schema in the prompt, validate responses (regex/JSON schema), and apply helper functions like clean_repeated_substrings from the README.
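To illustrate the idea behind repeated-substring cleanup, here is a minimal sketch. It is not the README's clean_repeated_substrings: strip_repeated_tail is a hypothetical helper, and its thresholds are illustrative defaults. It only handles the common failure mode where long decoding ends in the same unit repeated many times.

```python
def strip_repeated_tail(text: str, min_repeats: int = 10, max_unit: int = 200) -> str:
    """If text ends with one unit repeated min_repeats+ times, keep one copy."""
    if min_repeats < 2:
        raise ValueError("min_repeats must be at least 2")
    n = len(text)
    # Try every candidate unit length, shortest first.
    for unit_len in range(1, min(max_unit, n // min_repeats) + 1):
        unit = text[n - unit_len :]
        repeats = 1
        pos = n - 2 * unit_len
        # Walk backwards while the preceding chunk equals the trailing unit.
        while pos >= 0 and text[pos : pos + unit_len] == unit:
            repeats += 1
            pos -= unit_len
        if repeats >= min_repeats:
            # Drop all but one copy of the repeated unit.
            return text[: n - unit_len * (repeats - 1)]
    return text


if __name__ == "__main__":
    noisy = "Total: 42\n" + "ab" * 12
    print(repr(strip_repeated_tail(noisy)))
```

Run this before JSON or regex validation, since a runaway tail is a common reason an otherwise correct response fails to parse.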

Summary & Action Plan

- For multilingual, multi-format OCR workloads, evaluate HunyuanOCR’s single-model pipeline first to cut architectural complexity.

- Start with the vLLM recipe for fast PoC using the provided prompts and scripts, then iterate on prompt engineering and post-processing to meet production specs.

- Dive deeper via the HunyuanOCR Technical Report, Hugging Face demo, or by reproducing the visual examples from the README.

References

- Official README & README_zh

- Hugging Face Demo: https://huggingface.co/spaces/tencent/HunyuanOCR

- Model download: https://huggingface.co/tencent/HunyuanOCR

- Technical report: HunyuanOCR_Technical_Report.pdf